Introduction

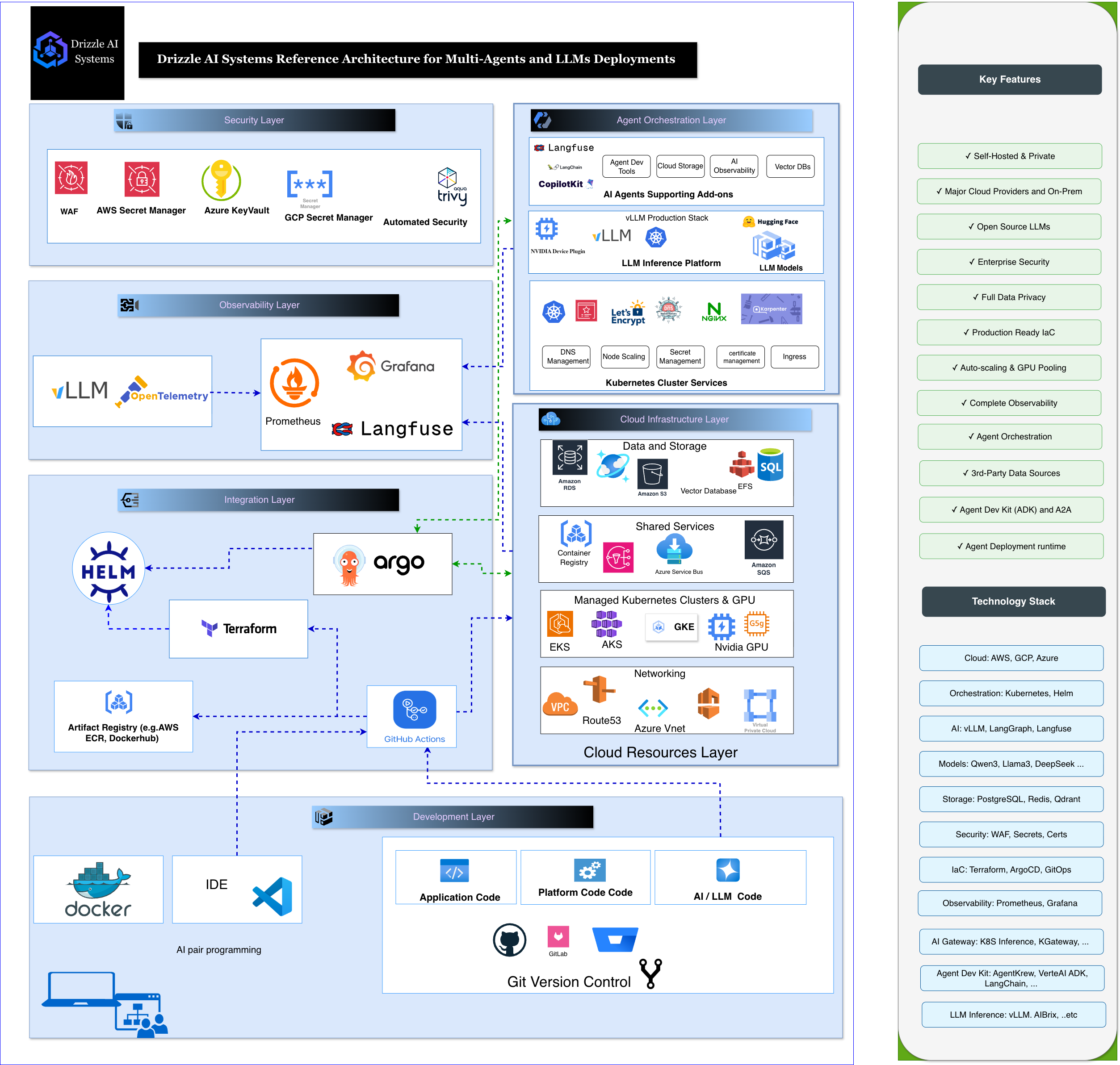

This document outlines the comprehensive architecture of the Drizzle AI Systems platform. It’s the result of years of hands-on experience building, deploying, and managing scalable, cloud-native AI infrastructure. Our framework is designed to provide a robust, secure, and observable foundation that you own entirely.

At Drizzle Systems, our mission is to eliminate the “AI Infrastructure Chasm” that stalls innovation. We do this by delivering production-grade, fully owned AI infrastructure in days, not months. The engine behind this is our set of pre-built blueprints: a comprehensive solution architecture designed for security, scalability, and speed.

This post will walk you through the key layers and components of our architecture, explaining how they work together to provide a robust foundation for your AI initiatives.

Core Architectural Principles

The core principle of our AI infrastructure deployments: secure, scalable, and fully owned by you!

Our architecture is not a black box. It’s a transparent, open-source solution deployed in your cloud account. From the Git repository to the cloud resources, you own everything.

Our Pillars

Our architecture is built on four foundational pillars:

- Unified Automation with IaC & GitOps: A single, auditable system for managing your entire platform.

- Optimized for LLM & Agent Serving: High-throughput, low-latency inference for any open-source or commercial model.

- Secure by Design: Essential security best practices are implemented from day one.

- Full-Stack Observability: Complete visibility into performance, cost, and security.

Architectural Layers

The Drizzle Systems platform is structured in logical layers, each building upon the last to create a cohesive, end-to-end solution.

The Core Architecture

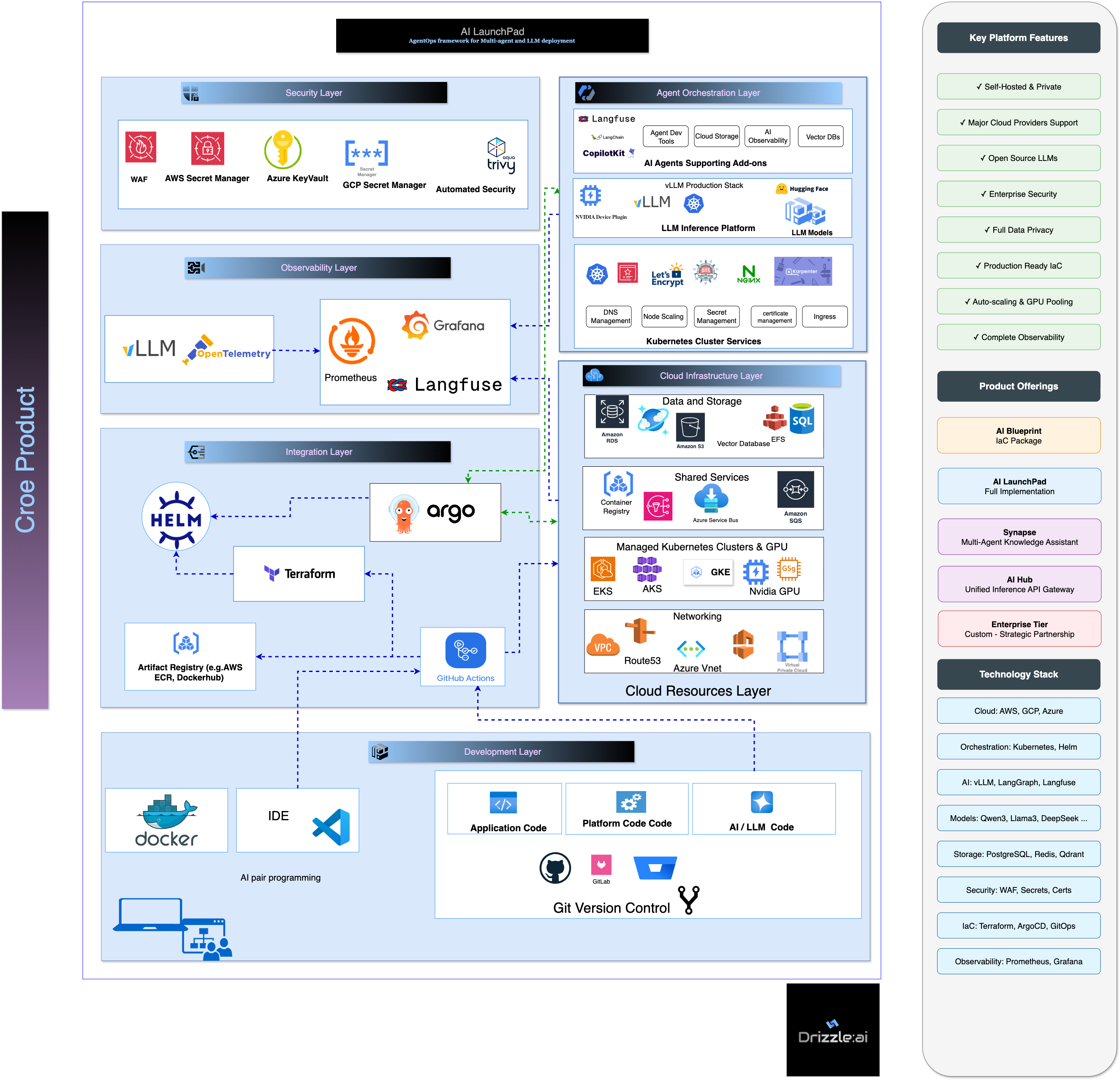

Our AgentOps framework delivers a comprehensive, production-ready solution architecture specifically designed for multi-agent systems and large language model deployment. This multi-layer architecture lets organizations implement AI infrastructure with built-in security, scalability, and governance.

The AgentOps Framework consists of six interconnected layers, each building upon the previous to create a cohesive, end-to-end AI platform. Each layer is designed with security, scalability, and observability as core principles, ensuring your AI infrastructure can grow with your business needs.

1. Development & Version Control Layer

This is the foundation of all automation and the single source of truth for your entire platform.

- Git Version Control: Developers and AI engineers work in their local IDEs (such as VS Code) and commit their application code, platform code (Terraform/Helm), and AI/LLM code to a Git repository.

- CI/CD Automation: A push to the repository triggers a CI/CD pipeline (GitHub Actions or GitLab CI) that builds, tests, and packages the code, including building Docker container images.
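
As an illustration of the packaging step, the Python sketch below derives an immutable image reference from the commit that triggered the pipeline, so every running container can be traced back to the exact commit that produced it. It assumes images are tagged with a short commit SHA; the registry and repository names and the `commit_sha_from_env` and `image_reference` helpers are hypothetical. `GITHUB_SHA` and `CI_COMMIT_SHA` are the variables GitHub Actions and GitLab CI set for the triggering commit.

```python
import re
from collections.abc import Mapping

# Full or abbreviated Git commit SHAs: lowercase hex, 7 to 40 characters.
_SHA = re.compile(r"[0-9a-f]{7,40}")


def commit_sha_from_env(env: Mapping[str, str]) -> str:
    """Read the commit SHA that the CI system exposes to the job."""
    # GitHub Actions sets GITHUB_SHA; GitLab CI sets CI_COMMIT_SHA.
    for var in ("GITHUB_SHA", "CI_COMMIT_SHA"):
        if env.get(var):
            return env[var]
    raise KeyError("no commit SHA found in GITHUB_SHA or CI_COMMIT_SHA")


def image_reference(registry: str, repository: str, commit_sha: str, length: int = 12) -> str:
    """Build an immutable '<registry>/<repository>:<short-sha>' image reference."""
    sha = commit_sha.strip().lower()
    if not _SHA.fullmatch(sha):
        raise ValueError(f"not a hex commit SHA: {commit_sha!r}")
    # Tagging by commit (rather than a mutable tag such as "latest") keeps
    # every deployed image traceable to its source revision.
    return f"{registry.rstrip('/')}/{repository}:{sha[:length]}"
```

In a pipeline step this would be called as `image_reference("registry.example.com", "agents/api", commit_sha_from_env(os.environ))`, with the result passed to `docker build -t` and `docker push`.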

2. Integration Layer

This layer takes the version-controlled code and translates it into running infrastructure and applications.

- Infrastructure as Code (Terraform): We use Terraform to declaratively define and provision all necessary cloud resources.

- GitOps (Argo CD): Argo CD continuously monitors your Git repository and automatically deploys any changes to your Kubernetes clusters, ensuring the live state always matches the desired state defined in Git.

- Container Registry: Docker images built by the CI pipeline are stored in a private registry (like Amazon ECR).

- Helm: Kubernetes applications are packaged as Helm charts for repeatable, configurable deployments.
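
The reconciliation Argo CD performs can be pictured as a diff between the desired state in Git and the live state in the cluster. The sketch below is a simplified model under stated assumptions, not Argo CD's actual diffing logic: resources are plain dicts keyed by a hypothetical `"kind/namespace/name"` string, and `plan_sync` and `Action` are illustrative names. With pruning enabled, objects removed from Git are also removed from the cluster, which corresponds to an Argo CD automated sync policy with `prune: true`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    verb: str  # "create", "update", or "delete"
    key: str   # "kind/namespace/name"


def plan_sync(desired: dict[str, dict], live: dict[str, dict], prune: bool = True) -> list[Action]:
    """Compute the actions that bring the live state in line with the desired state."""
    actions = []
    for key in sorted(desired):
        if key not in live:
            actions.append(Action("create", key))
        elif live[key] != desired[key]:
            actions.append(Action("update", key))  # drift, or a new commit in Git
    if prune:
        # Objects that exist in the cluster but were removed from Git are deleted.
        for key in sorted(live.keys() - desired.keys()):
            actions.append(Action("delete", key))
    return actions


if __name__ == "__main__":
    desired = {
        "Deployment/agents/api": {"image": "registry.example.com/agents/api:3f2c9a1b7d4e", "replicas": 3},
        "Service/agents/api": {"port": 80},
    }
    live = {
        "Deployment/agents/api": {"image": "registry.example.com/agents/api:0a1b2c3d4e5f", "replicas": 3},
        "ConfigMap/agents/old-flags": {"debug": "true"},
    }
    for action in plan_sync(desired, live):
        print(action.verb, action.key)
    # update Deployment/agents/api
    # create Service/agents/api
    # delete ConfigMap/agents/old-flags
```

Real controllers also normalize fields the cluster fills in (defaults, status, generated metadata) before comparing; a naive dictionary comparison like this one would otherwise report constant drift.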

3. Cloud Resources Layer

This is the foundational infrastructure provisioned by Terraform on your chosen cloud provider (AWS, GCP, or Azure).

- Compute & Orchestration: A managed Kubernetes cluster (EKS, GKE, or AKS) with both CPU and GPU-enabled nodes forms the core.

- Networking: A secure VPC (or VNet) provides network isolation.

- Storage: We configure storage to match each workload, including object storage (Amazon S3), block storage (Amazon EBS), and shared file systems (Amazon EFS), or their equivalents on GCP and Azure.

- Data and Cache: Managed databases (such as Amazon RDS) and caching layers (Redis) are provisioned to support your AI applications.
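
Network isolation starts with how the VPC address space is divided. As a hedged example of one common layout (an assumption, not necessarily the one Drizzle deploys), the sketch below splits a VPC CIDR into one public and one private subnet per availability zone using Python's standard `ipaddress` module; `plan_subnets` and the zone names are illustrative. In Terraform the same arithmetic is usually written with the built-in `cidrsubnet()` function; for example, `cidrsubnet("10.0.0.0/16", 4, 0)` yields `10.0.0.0/20`.

```python
import ipaddress


def plan_subnets(vpc_cidr: str, zones: list[str], new_prefix: int = 20) -> dict[str, dict[str, str]]:
    """Carve one public and one private subnet per zone out of the VPC CIDR."""
    vpc = ipaddress.ip_network(vpc_cidr)
    # Generator of consecutive, equally sized, non-overlapping blocks.
    blocks = vpc.subnets(new_prefix=new_prefix)
    plan = {}
    for zone in zones:
        try:
            public, private = next(blocks), next(blocks)
        except StopIteration:
            raise ValueError(
                f"{vpc_cidr} has room for fewer than {2 * len(zones)} /{new_prefix} subnets"
            ) from None
        # Assumed layout: load balancers in public subnets; nodes and databases private.
        plan[zone] = {"public": str(public), "private": str(private)}
    return plan
```

With `plan_subnets("10.0.0.0/16", ["us-east-1a", "us-east-1b", "us-east-1c"])`, the first zone receives `10.0.0.0/20` (public) and `10.0.16.0/20` (private), and the remaining zones take the next consecutive blocks.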

4. Agent Orchestration Layer

This is the heart of the Drizzle Systems platform, running on the Kubernetes cluster.

- LLM Inference Platform (vLLM): We deploy a production-grade vLLM stack for high-throughput, low-latency model serving.

- Agents DevTools: A suite of add-ons for AI agents that provides the tools to build complex, stateful agents, such as LangChain/LangGraph, CopilotKit, the Qdrant vector database, and Amazon S3 Vectors.

- Kubernetes Cluster Services: Essential production-grade services, including node auto-scaling with Karpenter, DNS management with ExternalDNS, an ingress controller and API gateway for secure traffic routing, load balancing, and a service mesh for inter-service communication.

- Security: Automated security is built in, using tools such as Checkov, Trivy, External Secrets Operator, and SOPS.

- Observability: We integrate Langfuse for deep, end-to-end tracing and observability of your LLM applications, alongside Prometheus and Grafana for infrastructure and performance monitoring.
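
vLLM ships an OpenAI-compatible HTTP server (`vllm serve <model>`, listening on port 8000 by default), so agents and applications can call self-hosted models with a standard chat-completions request. The sketch below builds and sends such a request using only the standard library; the base URL, model name, and the `build_chat_request` and `chat` helpers are placeholders, and a real deployment would reach the server through its Kubernetes Service or the API gateway.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str,
                       max_tokens: int = 256, temperature: float = 0.2) -> urllib.request.Request:
    """Build a POST to the OpenAI-compatible /v1/chat/completions endpoint."""
    payload = {
        "model": model,  # must match the model name the vLLM server is serving
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(base_url: str, model: str, prompt: str, timeout: float = 60.0) -> str:
    """Send the request and return the generated text."""
    req = build_chat_request(base_url, model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    # OpenAI-style responses put the generated text in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

The `model` field has to match the name the server was started with (or the value passed to `--served-model-name`); otherwise vLLM rejects the request.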

5. Security Layer

This layer implements comprehensive, automated security controls throughout the entire platform, ensuring your AI infrastructure is secure by design.

- Automated Infrastructure Security: Checkov performs static analysis on Terraform code to catch security misconfigurations before deployment, while Trivy scans container images for vulnerabilities in the CI/CD pipeline.

- Secret Management: External Secrets Operator (ESO) integrates with cloud-native secret stores (AWS Secrets Manager, Azure Key Vault, GCP Secret Manager) and automatically syncs secrets into Kubernetes Secrets for your applications, so they are never stored in Git or container images.

- Configuration Security: SOPS (Secrets OPerationS) encrypts sensitive configuration files using cloud KMS keys, ensuring secrets are version-controlled safely.

- LLM Guardrails: Automated content filtering and safety checks protect against prompt injection, data leakage, and inappropriate model outputs through integrated safety frameworks.

- Runtime Security: Kubernetes Pod Security Standards and Network Policies enforce least-privilege access and micro-segmentation between services.

- Compliance & Auditing: All security events, access patterns, and configuration changes are logged and monitored through integrated SIEM capabilities for compliance reporting and threat detection.
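
To make the CI/CD scanning step concrete, the sketch below gates a pipeline on a Trivy JSON report, such as one produced by `trivy image --format json --output report.json <image>`. It assumes Trivy's report layout of a top-level `Results` list whose entries carry a `Target` and a `Vulnerabilities` list (each with `VulnerabilityID` and `Severity`); the `blocking_findings` and `main` helpers and the `HIGH` threshold are illustrative choices. For a plain severity threshold, Trivy's own `--severity HIGH,CRITICAL --exit-code 1` flags achieve the same result; a custom gate is mainly useful when you need extra policy, such as time-boxed exceptions.

```python
import json

# Trivy's severity levels, lowest to highest.
SEVERITY_ORDER = ["UNKNOWN", "LOW", "MEDIUM", "HIGH", "CRITICAL"]


def blocking_findings(report: dict, threshold: str = "HIGH") -> list[tuple[str, str, str]]:
    """Return (target, vulnerability id, severity) for findings at or above threshold."""
    floor = SEVERITY_ORDER.index(threshold)
    found = []
    for result in report.get("Results", []):
        # "Vulnerabilities" is absent or null when a target has no findings.
        for vuln in result.get("Vulnerabilities") or []:
            severity = vuln.get("Severity", "UNKNOWN")
            if severity in SEVERITY_ORDER and SEVERITY_ORDER.index(severity) >= floor:
                found.append((result.get("Target", "?"), vuln.get("VulnerabilityID", "?"), severity))
    return found


def main(path: str) -> int:
    """Print blocking findings and return a process exit code."""
    with open(path, encoding="utf-8") as f:
        report = json.load(f)
    findings = blocking_findings(report)
    for target, vuln_id, severity in findings:
        print(f"{severity:8} {vuln_id} in {target}")
    return 1 if findings else 0  # a non-zero exit code fails the CI job
```

A CI step would run `raise SystemExit(main("report.json"))` right after the scan, so any blocking finding stops the image from reaching the registry.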

6. Observability Layer

This layer provides complete visibility into your AI platform’s performance, costs, and security across all components.

- LLM Observability: Langfuse provides deep tracing and analytics for your AI applications, tracking token usage, model performance, conversation flows, and cost attribution across all your LLM interactions.

- Infrastructure Monitoring: Prometheus collects metrics from Kubernetes, applications, and cloud resources, while Grafana provides rich dashboards for real-time visualization and alerting.

- Log Aggregation: Centralized logging with OpenTelemetry and Grafana Loki lets you query and filter logs from across the platform in one place for debugging and compliance.

- Distributed Tracing: OpenTelemetry provides end-to-end request tracing across your microservices and AI agents.

- Cost Monitoring: Real-time cost tracking and attribution for compute, storage, and AI model usage with automated budget alerts and optimization recommendations.

- Security Monitoring: Continuous security posture assessment with real-time alerts for policy violations, unusual access patterns, and potential threats.
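
End-to-end tracing works because every hop forwards a trace context. OpenTelemetry propagates it by default with the W3C Trace Context `traceparent` header (`00-<trace-id>-<parent-id>-<trace-flags>`). The sketch below shows that mechanism with the standard library only; the helper names are hypothetical, only version `00` is handled, and in practice the OpenTelemetry SDK's propagators do this for you.

```python
import re
import secrets

# Version 00 only: "00-<32 hex trace-id>-<16 hex parent-id>-<2 hex trace-flags>"
_TRACEPARENT = re.compile(r"00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})")


def _random_id(nbytes: int) -> str:
    """Random lowercase hex id; all-zero ids are invalid, so retry on one."""
    while True:
        value = secrets.token_hex(nbytes)
        if value.strip("0"):
            return value


def new_traceparent(sampled: bool = True) -> str:
    """Start a new trace, e.g. at the API gateway."""
    trace_id = _random_id(16)  # 16 bytes -> 32 hex chars, shared by every span in the trace
    span_id = _random_id(8)    # 8 bytes -> 16 hex chars, unique to this span
    return f"00-{trace_id}-{span_id}-{'01' if sampled else '00'}"


def child_traceparent(incoming: str) -> str:
    """Header to send downstream: same trace-id and flags, this service's span as parent."""
    match = _TRACEPARENT.fullmatch(incoming.strip())
    if not match or not match.group(1).strip("0") or not match.group(2).strip("0"):
        # An invalid header is ignored and treated as absent: start a new trace.
        return new_traceparent()
    trace_id, _parent_id, flags = match.groups()
    return f"00-{trace_id}-{_random_id(8)}-{flags}"
```

Because the trace-id never changes while each service substitutes its own span id as the parent-id, a tracing backend can rebuild the full call tree of a request that passes through the gateway, several agents, and the model server.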

Consultancy and Implementation Services

While our architecture provides a robust foundation, we understand that each organization has unique requirements. That’s why we offer specialized consultancy services to help you implement, customize, and optimize your AI infrastructure:

AI/LLM Platform Advisory

Our experts provide strategic guidance tailored to your specific needs, helping you make informed decisions about architecture, technology choices, and implementation approaches.

Multi-Model Customization & Fine-Tuning

We optimize your AI models for peak performance, ensuring efficient GPU utilization and cost-effective deployment for your specific workloads.

AI Native Assessment

We evaluate your current infrastructure and provide a comprehensive roadmap for modernization and optimization, ensuring you get the most value from your AI investments.

Customized Cloud & AgentOps Architecture

For teams with unique requirements, we design bespoke architectures tailored to your specific AI workloads and business goals.

Legacy Platform Migration

We help you transition from brittle or costly setups to your new cloud-native platform, minimizing disruption and maximizing continuity.

Team Enablement & Training

We provide hands-on training tailored to your stack and roles, ensuring your team has the knowledge and skills to manage and optimize your AI infrastructure.

Conclusion

This layered, automated, and open architecture provides the speed of a managed service with the power and control of total ownership, enabling you to finally bridge the AI Infrastructure Chasm and focus on innovation.

The Drizzle Systems AgentOps Framework represents years of battle-tested experience in deploying production AI systems. By combining Infrastructure as Code, GitOps automation, and comprehensive observability, we eliminate the months-long platform development cycle that typically blocks AI initiatives.

Key Benefits of Our Architecture:

- Rapid Deployment: Get from zero to a production-ready AI platform in days, not months

- Full Ownership: Every component runs in your cloud account with complete transparency

- Enterprise Security: Built-in security best practices from infrastructure to application layer

- Scalable Foundation: Designed to grow from prototype to enterprise-scale workloads

- Cost Optimization: Real-time monitoring and optimization recommendations keep costs under control

Whether you’re building your first AI agent or scaling to support thousands of users, our AgentOps Framework provides the robust foundation you need to succeed. The architecture is not just about technology—it’s about enabling your team to focus on what matters most: building innovative AI solutions that drive business value.

Ready to see how this architecture can transform your AI initiatives? Our team is standing by to show you exactly how the Drizzle Systems platform can eliminate your infrastructure bottlenecks and accelerate your path to production AI.

Stop Building Infra. Start Delivering AI Innovation.

Your AI agents and applications are ready, but infrastructure complexity is creating bottlenecks. We eliminate these obstacles with enterprise-grade AI infrastructure that integrates seamlessly into your existing cloud environment, turning months of deployment work into days of rapid delivery.